Lean service architectures with Java EE 6

Elements and patterns of a lean SOA. Although Java EE 6 is far less complex than previous platform versions, it can still be misused to create exaggerated and bloated architectures. In this article, the author delineates the essential ingredients of a lean service-oriented architecture (SOA), then explains how to implement it without sacrificing maintainability.

The complexity and bloat often associated with Java EE are largely due to the inherent complexity of distributed computing; otherwise, the platform is surprisingly simple. As I discussed in my last article for JavaWorld, Enterprise JavaBeans (EJB) 3.1 actually consists of annotated classes and interfaces that are even leaner than classic POJOs; it would be hard to find anything more to simplify. Nonetheless, (mis)use of Java EE can lead to bloated and overstated architectures. In this article, I discuss the essential ingredients of a lean service-oriented architecture (SOA), then explain how to implement one in Java EE without compromising maintainability. I'll start by describing aspects of SOA implementation that lend themselves to procedural programming, then discuss domain-driven (aka object-oriented) design.

SOA: The essential ingredients

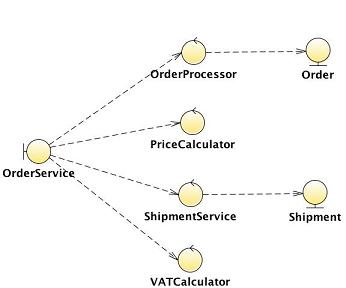

The crucial artifact in any SOA is an exposed service. A service can be considered as a contract with significant business value. A clear relationship should exist between the service contract and a business requirement, a use case, or even a user story. A service can be realized as a single action or a set of cohesive actions. For example, an OrderService might comprise a single action that performs the actual order, or a group of related operations that also include canceling the order and receiving the order status. An SOA does not reveal any details about how a service must be implemented; it aims for services to be technology- and even location-agnostic.

SOA principles can be mapped to Java EE artifacts. The most important ingredients in a Java-based SOA implementation are interfaces and packages. Everything else is only a refinement, or design. Only one artifact in the language -- a plain Java interface -- meets the requirements of a service contract. It is often used to decouple the client from the service implementation. It can also be used to expose the functionality of a component.
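To make the role of the plain interface concrete, here is a minimal sketch in plain Java. The name OrderService comes from the example above; the method signatures, SimpleOrderService, and OrderClient are assumptions made for illustration and are not taken from the article's listings.

```java
import java.math.BigDecimal;
import java.util.HashMap;
import java.util.Map;

// The contract: a plain Java interface without any dependency on the EJB API.
// The method signatures are hypothetical.
interface OrderService {
    String order(int customerId, int productId, BigDecimal price);
    void cancel(String orderNumber);
    String status(String orderNumber);
}

// One possible implementation; the client never refers to this type.
class SimpleOrderService implements OrderService {
    private final Map<String, String> statusByOrder = new HashMap<>();
    private int sequence = 0;

    public String order(int customerId, int productId, BigDecimal price) {
        String orderNumber = "O-" + (++sequence);
        statusByOrder.put(orderNumber, "PLACED");
        return orderNumber;
    }

    public void cancel(String orderNumber) {
        // replace() only changes orders that are already known
        statusByOrder.replace(orderNumber, "CANCELED");
    }

    public String status(String orderNumber) {
        return statusByOrder.getOrDefault(orderNumber, "UNKNOWN");
    }
}

// The client is written against the contract only, so the implementation
// (local, remote, or a test double) can be exchanged without touching it.
class OrderClient {
    private final OrderService service;

    OrderClient(OrderService service) {
        this.service = service;
    }

    String orderAndCancel(int customerId, int productId, BigDecimal price) {
        String orderNumber = service.order(customerId, productId, price);
        service.cancel(orderNumber);
        return service.status(orderNumber);
    }
}
```

Because OrderClient receives the service through its constructor, nothing in it reveals whether the implementation is a session bean or a simple object.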

Component-based design (CBD) is SOA's evolutionary predecessor. Component contracts are comparable to SOA's services, except for their heavier dependence on particular technology and general lack of operations-related metadata -- such as service-level agreements (SLAs) -- or strong governance principles. A component is built on the maximal cohesion, minimal coupling principle. A component should be highly independent of other components, and the implementation should consist of related (cohesive) elements. In a Java EE SOA implementation, a component is a Java package with these strong semantics. The package's functionality is exposed with a single interface, or in rare cases a few interfaces.

And the essential complexity

SOA implies distribution of services, which is not always necessary or desirable in a Java EE application. It would be foolish to access a local service remotely just to satisfy some high-level SOA concepts. Direct local access should always be preferred over remote services. A local call is not only orders of magnitude faster than a remote one; the parameters and return values can also be accessed "per reference" and need not be serialized.
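The difference between "per reference" and serialized access can be observed with plain Java serialization. The sketch below is only a rough stand-in for a remote invocation: OrderRequest and CallSemantics are hypothetical names, and a real remote EJB call involves far more than this round trip.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Hypothetical parameter object used only for this illustration.
class OrderRequest implements Serializable {
    private static final long serialVersionUID = 1L;
    int quantity;

    OrderRequest(int quantity) {
        this.quantity = quantity;
    }
}

class CallSemantics {

    // Local call: the callee works on the caller's instance.
    static void localCall(OrderRequest request) {
        request.quantity++;
    }

    // Remote-like call: the callee only sees a deserialized copy,
    // so its changes never reach the caller's instance.
    static void remoteLikeCall(OrderRequest request) {
        OrderRequest copy = roundTrip(request);
        copy.quantity++;
    }

    // Serializes the object to a byte array and reads it back.
    static OrderRequest roundTrip(OrderRequest original) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(original);
            }
            try (ObjectInputStream in =
                     new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
                return (OrderRequest) in.readObject();
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException("serialization round trip failed", e);
        }
    }
}
```

After localCall the caller sees the incremented quantity; after remoteLikeCall it does not, and the round trip itself is the extra work that every remote parameter and return value has to pay for.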

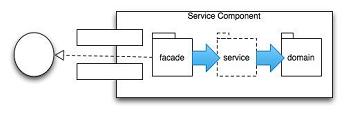

Whether the service is local or remote, the business logic should always be executed consistently. A component needs a dedicated remoting and transaction boundary interface, which acts as a gatekeeper (see Figure 1). The main responsibility of such a facade is to keep the granularity of the methods coarse and the persistent state of the component consistent.

Figure 1. Remoting/transactions boundary

You can achieve the right granularity only by carefully crafting the interface so that consistency can be easily ensured with built-in transactions. Listing 1 shows how to configure declarative transactions and expose a remote business interface.

Listing 1. Declarative transaction and remoting declaration

    package ...bookstore.business.ordermgmt.facade;

    @Stateless

Applying the @TransactionAttribute(TransactionAttributeType.REQUIRES_NEW) annotation to the class causes all methods to inherit this setting automatically. You could, alternatively, rely on the default (which is TransactionAttributeType.REQUIRED) and not specify a transaction setting. However, the OrderService is the transaction and remoting boundary. It is accessed exclusively by the UI, which must not start any transactions. Using the REQUIRES_NEW attribute is more explicit. It always starts a new transaction, which is what you would expect from a boundary. The REQUIRED configuration would reuse an existing transaction or, if invoked without a transaction context, start a new one. A boundary, however, will never be invoked in an existing transaction, which makes the default setting more confusing.

The @Remote annotation is applied to the bean class -- not the business interface -- which may look strange at first glance. The business interface does not follow coding conventions either: its name doesn't contain the Remote suffix. As a result, the service consumer sees only a plain old Java interface and is not directly aware of using an EJB. Only an indirect dependency on unchecked exceptions is present. If you are building a Web application, the OrderServiceBean would be exposed with a local business interface only, whereas a rich client runs in a separate process and requires a remote interface. In either case there is no need to further emphasize the business interface's distributive capabilities. It gets injected or looked up, and the consumer is only interested in the functionality and not its remote visibility.

Separation of concerns, or divide and conquer

Listing 1's OrderServiceBean emphasizes the business logic and hides the component's implementation. These are the responsibilities of a typical Facade pattern. Furthermore, the OrderServiceBean ensures the component's consistency by starting a transaction before every method exposed over the business interface is executed. Transactions are a cross-cutting concern -- an aspect -- already implemented by the EJB container. Implementing both the controller logic and the actual business logic would be too much responsibility for a single facade.

The intention of a service is straightforward -- it is the realization of the business logic. In the SOA world it has a rather procedural nature. A service resides behind the facade, so it can never be accessed directly by the UI or other presentation components. A service is stateless and can only be called by the facade. Every facade method starts a new transaction, so a service can safely rely on the transaction's existence. Listing 2 shows the transaction and remoting configuration of a service.

Listing 2. Structure of a service

    @Stateless
    @Local(OrderProcessor.class)
    @TransactionAttribute(TransactionAttributeType.MANDATORY)
    public class OrderProcessorBean implements OrderProcessor {

        @PersistenceContext
        private EntityManager em;

        public Order processOrder(int customerId, int productId, BigDecimal price) {

In Listing 2, the concerns are clearly separated. The facade provides the cross-cutting functionality and plays the controller role, and the service focuses on the actual domain-logic implementation. The clearly separated roles and responsibilities make it possible to predefine a service's structure and configuration easily. A service is a stateless session bean with a local business interface. It is always invoked by the facade in a transaction, so it can be deployed with the MANDATORY setting. This restrictive TransactionAttribute further emphasizes the encapsulation; it is not possible to call it directly without a transaction. The bean implementation exposes the business interface with the @Local annotation, so the interface is independent of the EJB API.
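The interplay of the three transaction attributes mentioned so far (REQUIRED, REQUIRES_NEW on the facade, MANDATORY on the service) can be modeled with a few lines of plain Java. This is a toy model under simplifying assumptions, not how an EJB container is implemented: TxAttribute, ToyContainer, and TxDemo are invented names, and a transaction is represented by nothing more than a string identifier.

```java
import java.util.function.Supplier;

// Invented for this sketch; mirrors three of the TransactionAttributeType values.
enum TxAttribute { REQUIRED, REQUIRES_NEW, MANDATORY }

// Toy stand-in for the container's transaction interception.
class ToyContainer {
    private String currentTx;   // null means "no transaction is active"
    private int counter;

    <T> T invoke(TxAttribute attribute, Supplier<T> businessMethod) {
        String callerTx = currentTx;
        switch (attribute) {
            case MANDATORY:
                // The caller has to bring a transaction; otherwise the call is rejected.
                if (callerTx == null) {
                    throw new IllegalStateException("no transaction context");
                }
                break;
            case REQUIRED:
                // Reuse the caller's transaction, or start one if there is none.
                if (callerTx == null) {
                    currentTx = "tx-" + (++counter);
                }
                break;
            case REQUIRES_NEW:
                // Always start a fresh transaction, regardless of the caller's context.
                currentTx = "tx-" + (++counter);
                break;
        }
        try {
            return businessMethod.get();
        } finally {
            currentTx = callerTx;   // restore the caller's context
        }
    }

    String currentTx() {
        return currentTx;
    }
}

class TxDemo {
    // The facade (REQUIRES_NEW) opens the transaction, the service (MANDATORY) joins it.
    static String facadeCallsService(ToyContainer container) {
        Supplier<String> service =
            () -> container.invoke(TxAttribute.MANDATORY, () -> container.currentTx());
        return container.invoke(TxAttribute.REQUIRES_NEW, service);
    }
}
```

In this model a call through the facade always succeeds and the service runs inside the facade's transaction, whereas invoking the MANDATORY method directly, without an active transaction, fails. That failure is the encapsulation effect described above.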

Domain objects or structures?

Since services implement the actual business logic, and the facade cares about the cross-cutting concerns, there is no more functionality left to be implemented in the domain objects. The Java Persistence API (JPA) entities consist only of annotated attributes and getters/setters; they contain no business logic, as you can see in Listing 3.

Listing 3. An anemic JPA entity

    @Entity
    @Table(name="T_ORDER")
    @NamedQueries({
        @NamedQuery(name="",query="SELECT o FROM Order o where o.customerId = :customerId")
    })
    public class Order {

        public final static String PREFIX ="..ordermgmt.domain.Order";

        @Id
        public Long getId() {

        public double getPrice() {

        public void setPrice(double price) {

        public int getProductId() {

        public void setProductId(int productId) {

Although the anemic object model is considered to be an antipattern, it fits very well into a SOA. Most of the application is data-driven, with only a small amount of object-specific or type-dependent logic. For simpler applications, anemic JPA entities have advantages too:

Dumb entities are easier to develop. No domain knowledge is required.

Anemic JPA entities can be generated more easily. The necessary metadata can be entirely derived from the database. You can just maintain the database, and the existing entities can be safely overwritten by newly generated ones.

The persistent entities can be detached and transferred to the client. Aside from the classic "lazy loading" challenges, there are no further surprises. The client will only "see" the accessors and will not be able to invoke any business methods.

Thinking about internal structure

I've already identified some key ingredients of a service-oriented component:

Facade: Provides simplified, centralized access to the component and decouples the client from the concrete services. It is the network and transaction boundary.

Service: The actual implementation of business logic.

Domain structure: This is a structure rather than an object. It implements the component's persistence and exposes all of its state to the services, without encapsulation.

You will find these three layers in most components. The component structure manifests itself as internal packages named by the key ingredients. The ordermgmt component consists of the facade, service, and domain packages, as shown in Figure 2.

Figure 2. Internal component layering with packages

This convention not only standardizes the structure and improves maintainability, but also allows automatic dependency validation with frameworks like JDepend, Checkstyle, Dependometer, SonarJ, or XRadar. You can even perform the validation at build time. If you do, the continuous build would break on violation of defined dependencies. The rules are clearly defined with strict layering: a facade may access a service, and the service a domain object, but not vice versa.
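The layering rule itself is simple enough to express in a few lines. The following sketch does not use the API of any of the tools named above; LayeringRules is a hypothetical helper that only illustrates the kind of check such a tool performs. It assumes that the last package segment names the layer and, as a further assumption, treats any inward dependency (including facade to domain) as legal.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper, not part of JDepend or any other tool.
class LayeringRules {

    // Layers ordered from the outermost to the innermost one.
    private static final List<String> LAYERS = Arrays.asList("facade", "service", "domain");

    // A dependency is allowed if it stays within a layer or points inward.
    static boolean isAllowed(String fromPackage, String toPackage) {
        return layerOf(fromPackage) <= layerOf(toPackage);
    }

    // Derives the layer index from the last segment of the package name.
    private static int layerOf(String packageName) {
        String lastSegment = packageName.substring(packageName.lastIndexOf('.') + 1);
        int index = LAYERS.indexOf(lastSegment);
        if (index < 0) {
            throw new IllegalArgumentException("unknown layer: " + packageName);
        }
        return index;
    }
}
```

A build step could run such a check over every package dependency it discovers and fail on the first pair for which isAllowed returns false, for example a domain class importing something from the service package.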

DAOs: Dead or alive?

The original context of a Data Access Object (DAO), as defined in the Core J2EE pattern catalog (the emphasis is mine), is:

Access to data varies depending on the source of the data. Access to persistent storage, such as to a database, varies greatly depending on the type of storage (relational databases, object-oriented databases, flat files, and so forth) and the vendor implementation.

The motivation for this pattern was the desire to achieve greatest possible decoupling from the concrete realization of the data-store mechanics.

The question to ask is how often you've had a concrete requirement to switch among "relational databases, object-oriented databases, flat files, and so forth" in a real-world project. In the absence of such a requirement, you should ask yourself how likely such a replacement of the data store really is. Even if it is likely to happen, data-store abstractions are rather leaky. JPA's EntityManager is comparable to a domain store and works with managed entities. Other data stores are more likely to use the "per value" approach and so return a new copy of a persistent entity on every request in a single transaction. This is a huge semantic difference; with the EntityManager, there's no need to merge attached entities back, whereas it is absolutely necessary in the case of a DAO with data-transfer objects.
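The semantic gap between the two approaches shows up even in a toy model. In the sketch below, ManagedStore loosely imitates a store that hands out the same managed instance within a transaction, while PerValueStore returns a fresh copy on every read. All three class names are hypothetical, and neither class reflects how JPA or any real data store is implemented.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical record type for the illustration.
class OrderRecord {
    final long id;
    double price;

    OrderRecord(long id, double price) {
        this.id = id;
        this.price = price;
    }
}

// Hands out the same instance for the same id, loosely like managed entities.
class ManagedStore {
    private final Map<Long, OrderRecord> managed = new HashMap<>();

    void persist(OrderRecord record) {
        managed.put(record.id, record);
    }

    OrderRecord find(long id) {
        return managed.get(id);
    }
}

// Hands out a new copy on every read; changes have to be written back explicitly.
class PerValueStore {
    private final Map<Long, OrderRecord> rows = new HashMap<>();

    void save(OrderRecord record) {
        // Store a private copy so later changes to the argument have no effect.
        rows.put(record.id, new OrderRecord(record.id, record.price));
    }

    OrderRecord find(long id) {
        OrderRecord row = rows.get(id);
        return row == null ? null : new OrderRecord(row.id, row.price);
    }
}
```

With ManagedStore, changing the price on a found record is immediately visible to the next find. With PerValueStore the same change is lost unless the caller passes the modified copy to save again, which is the "merge back" step a DAO interface would have to expose or hide.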

Even a perfect database abstraction is leaky in practice. Different databases behave differently and have specific locking and performance characteristics, in particular. An application might run and scale perfectly on one database but encounter frequent deadlocks with another product. No abstractions or design patterns can neutralize such variations. The only possible way to survive such a migration is to tweak the isolation levels, transactions, and database configuration. These issues can't be hidden behind a DAO interface, and it's likely that the DAO implementation would not even change. The DAO abstraction would only be useful if the actual JPA-QL or SQL statements change, which is a minor problem.

So neither the original definition nor a database-migration use case justifies the implementation of a dedicated DAO. So are DAOs dead? For standard use cases, yes. But you still need a DAO in most applications to adhere to the principles of DRY (don't repeat yourself) and separation of concerns. It is more than likely that an average application uses a set of common database operations over and over again. Without a dedicated DAO, the queries will be sprinkled through your business tier. Such duplication significantly decreases the application's maintainability. But the redundant code can be easily eliminated with a DAO that encapsulates the common data-access logic.

DAOs aren't dead, but they cannot be considered as a general best practice any more. They should be created in a bottom-up, rather than a top-down, fashion. If you discover data-access code duplication in your service layer, just factor it out to a dedicated DAO and reuse it. Otherwise it is just fine to delegate to an EntityManager from a service. The enforcement of an empty DAO layer is even more harmful, because it requires you to write dumb code for even simple use cases. The more code is produced, the more time you must spend to write tests and to maintain it.

With JDK 1.5 and the advent of generics, it is possible to build and deploy a generic, convenient, and typesafe DAO once and reuse it from a variety of services. Such a DAO should come with a business interface to encourage unit testing and mocking. Listing 4 shows an example of such an interface.

Listing 4. DAO business interface

    import java.util.List;
    import java.util.Map;

    public interface CrudService {
        public <T> T create(T t);
        public <T> T find(Class<T> type,Object id);
        public <T> T update(T t);
        public void delete(Object t);
        public List findWithNamedQuery(String queryName);
        public List findWithNamedQuery(String queryName,int resultLimit);
        public List findWithNamedQuery(String namedQueryName, Map<String,Object> parameters);
        public List findWithNamedQuery(String namedQueryName, Map<String,Object> parameters,int resultLimit);
    }

However, the business interface is optional in EJB 3.1. The DAO must be invoked only in the context of an already existing transaction, so it is @Local and comes with the MANDATORY transaction setting.

Rather than polluting this POJI (plain old Java interface) with a @Local annotation, you expose it through the session bean implementation. The bean implementation itself delegates to the EntityManager only in simple cases. You should increase the DAO's value and encapsulate reusable, project-specific queries in it. Listing 5 shows a generic DAO, realized as a service.

Listing 5. A generic DAO, realized as a service

    @Stateless
    @Local(CrudService.class)
    @TransactionAttribute(TransactionAttributeType.MANDATORY)
    public class CrudServiceBean implements CrudService {

        @PersistenceContext
        EntityManager em;

        public <T> T create(T t) {
            this.em.persist(t);
            this.em.flush();
            this.em.refresh(t);
            return t;
        }

        @SuppressWarnings("unchecked")
        public void delete(Object t) {

        public <T> T update(T t) {

        public List findWithNamedQuery(String namedQueryName){

        public List findWithNamedQuery(String queryName, int resultLimit) {

        public List findByNativeQuery(String sql, Class type) {

More challenging are the query methods. You can't know the number of query parameters and their names when you develop the DAO service, so you must provide them in a generic way. Listing 6 shows the creation of query parameters in a fluent way with the Builder pattern.

Listing 6. The builder for query parameters

    import java.util.HashMap;
    import java.util.Map;

    public class QueryParameter {

        private Map<String,Object> parameters = null;

        // Private: instances are created through the static with() factory.
        private QueryParameter(String name,Object value){
            this.parameters = new HashMap<String,Object>();
            this.parameters.put(name, value);
        }

        public static QueryParameter with(String name,Object value){
            return new QueryParameter(name, value);
        }

        // Returns this instance so that calls can be chained.
        public QueryParameter and(String name,Object value){
            this.parameters.put(name, value);
            return this;
        }

        public Map<String,Object> parameters(){
            return this.parameters;
        }
    }

The most suitable data structure for the transportation of query parameters is a java.util.Map, but it's rather inconvenient to create. The simple QueryParameter builder makes the construction of the query parameters more convenient and fluent. Listing 7 shows the use of the QueryParameter builder to construct the parameters.

Listing 7. The fluent way to construct a query parameter

    import static ...dataservice.QueryParameter.*;

    public List<Order> findOrdersForCustomer(int customerId){

The static with() method creates a new QueryParameter instance. Because it is static, it can be imported statically, which makes the qualification with QueryParameter superfluous. The and() method just puts the parameter into the internal Map and returns the QueryParameter again, which makes the construction fluent. Finally, the parameters() method returns the internal Map, which can be passed directly to the CrudService.
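The complete call chain can be tried without a container. The sketch below repeats the builder from Listing 6 (with package-private visibility so that it compiles as a single file) and adds a hypothetical QueryParameterDemo. The "status" parameter and its value are assumptions made only to show chaining with and().

```java
import java.util.HashMap;
import java.util.Map;

// Repeated from Listing 6 so that the sketch compiles on its own.
class QueryParameter {
    private final Map<String, Object> parameters = new HashMap<>();

    private QueryParameter(String name, Object value) {
        this.parameters.put(name, value);
    }

    static QueryParameter with(String name, Object value) {
        return new QueryParameter(name, value);
    }

    QueryParameter and(String name, Object value) {
        this.parameters.put(name, value);
        return this;
    }

    Map<String, Object> parameters() {
        return this.parameters;
    }
}

// Hypothetical caller; "status" is not a parameter of any query in the article.
class QueryParameterDemo {
    static Map<String, Object> openOrdersOf(int customerId) {
        return QueryParameter.with("customerId", customerId)
                             .and("status", "OPEN")
                             .parameters();
    }
}
```

The resulting Map holds one entry per named parameter. A DAO implementation would presumably iterate over these entries and bind each one to the named query before executing it.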

A touch of pragmatism

Most service components are not complicated and consist mainly of basic data operations. In these cases the facade would just delegate to a service, which in turn delegates to the javax.persistence.EntityManager or a generic CrudService to perform its persistence tasks. With a dedicated DAO in between, you end up with two dumb delegates: the facade only delegates to the service, and the service to the CrudService (aka DAO). Delegates without additional responsibilities are just dead code. They only increase the code complexity and make maintenance more tedious. You could fight this bloat by making the service layer optional. The facade would manage the persistence and delegate the calls to the EntityManager. This approach violates the separation-of-concerns principle, but it's a very pragmatic one. It is still possible to factor reusable logic from the facade into the services with minimal effort.

If you really want to encapsulate the data access in a dedicated layer, you can easily implement it once and reuse the generic access in different projects. Such a generic DAO does not belong to a particular business component; placing it there would violate the component's cohesion. In our case it is deployed in a technical component with the name dataservice.

Diagramming robustness

The architecture I've described in this article can be expressed with a robustness diagram consisting of Entity, Control, and Boundary (as defined on the Agile Modeling site). The original definition of the Entity-Control-Boundary (ECB) architectural pattern matches perfectly with our pattern language. A domain structure is an Entity, the Control is a service, and the Boundary is realized with a facade. In simpler cases the facade and service can collapse, and a service would be realized only as a facade's method in that case. Figure 3 shows the service component visualized with a robustness diagram:

Figure 3. The service component in a robustness diagram

We could even replace our custom naming in the internal-component layer with the names of the ECB elements. (The naming of Java EE components is mainly influenced by the Core J2EE Patterns, so the terms facade, service, and domain object are more typical in an "enterprise" Java context.)

As lean as possible, but not leaner

It would be hard to streamline the service-oriented architecture presented in this article further without sacrificing its maintainability. I know of no other framework that lets you express a service-based architecture in such a lean and straightforward manner. No XML configuration, external libraries, or specific frameworks are needed. The deployment consists of only a single JAR file with a short persistence.xml like the example shown in Listing 8.

Listing 8. persistence.xml -- the only XML configuration needed

    <persistence>
        <persistence-unit name="prod" transaction-type="JTA">
            <jta-data-source>jdbc/sample</jta-data-source>
        </persistence-unit>
    </persistence>

The whole architecture is based on a few annotated Java classes with pure interfaces (that is, interfaces that are not dependent on the EJB API). With EJB 3.1 you could even remove the interfaces and inject the bean implementation directly. Services and DAOs could be deployed without any interfaces, which would halve the total number of files and make the component significantly leaner. However, you would lose the ability to mock out the service and DAO implementation easily for plain unit tests.
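What that testability looks like in practice can be sketched with a hand-written stub. The interface below is a reduced slice of the CrudService contract, and Order, InMemoryCrudService, and OrderProcessor are simplified, hypothetical stand-ins rather than the classes from the listings; no EntityManager or container is involved.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Reduced slice of the CrudService contract; only two operations are kept.
interface CrudService {
    <T> T create(T t);
    <T> T find(Class<T> type, Object id);
}

// Simplified, hypothetical entity without any JPA annotations.
class Order {
    private final long id;
    private final int customerId;

    Order(long id, int customerId) {
        this.id = id;
        this.customerId = customerId;
    }

    long getId() { return id; }
    int getCustomerId() { return customerId; }
}

// In-memory stub: keeps objects in a Map keyed by the id the extractor returns.
class InMemoryCrudService implements CrudService {
    private final Map<Object, Object> store = new HashMap<>();
    private final Function<Object, Object> idOf;

    InMemoryCrudService(Function<Object, Object> idOf) {
        this.idOf = idOf;
    }

    public <T> T create(T t) {
        store.put(idOf.apply(t), t);
        return t;
    }

    public <T> T find(Class<T> type, Object id) {
        // cast() passes null through, so unknown ids yield null
        return type.cast(store.get(id));
    }
}

// The service under test depends on the interface only.
class OrderProcessor {
    private final CrudService crud;

    OrderProcessor(CrudService crud) {
        this.crud = crud;
    }

    Order processOrder(long orderId, int customerId) {
        return crud.create(new Order(orderId, customerId));
    }
}
```

A plain unit test can construct OrderProcessor with the stub and verify what was stored. If the service referenced the bean class directly, the same test would need a subclass or a mocking library capable of replacing concrete classes.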
